Test Driven Development

Why we write tests and how they've helped us.

January 3, 2020

Why We Write Tests

Arguably, test coverage is one of the most important features of an application. Tests serve as an implementation roadmap, improve confidence in our algorithms, and can keep our code “clean”.

Test Driven Development (TDD) also allows us to feel more confident about changing existing features or implementing new ones without the fear of bringing down the whole application.

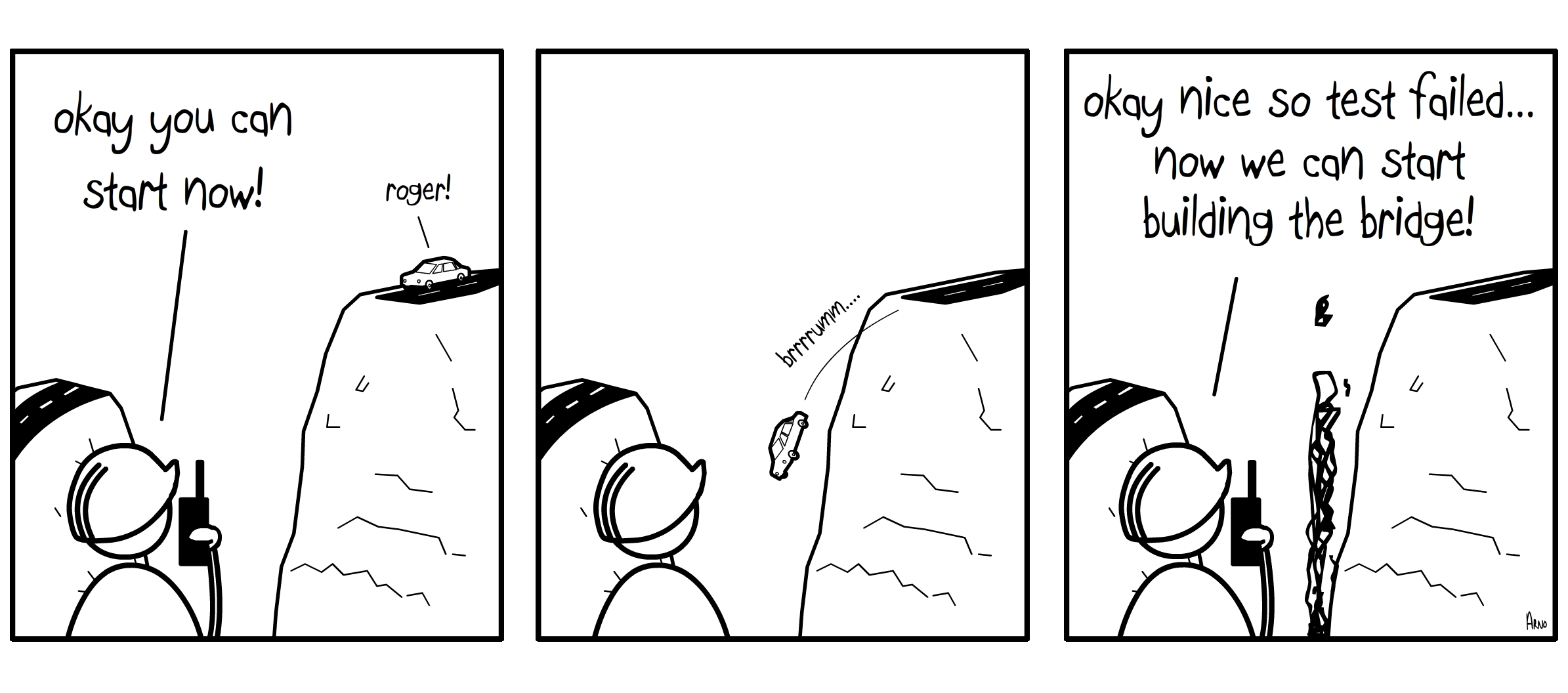

The ultimate goal with TDD is to write tests before writing any implementation code.

Ideally, when adding a new feature, the first step is to write a failing spec with the desired end behavior, and then write the corresponding code that makes the test pass.
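The cycle of writing a failing test and then making it pass can be sketched in a few lines. This is a minimal illustration, not code from our projects: the `PriceFormatter` module and its `dollars` method are hypothetical, and the example uses Minitest (bundled with Ruby) rather than RSpec so that it runs without extra gems. It also assumes non-negative amounts.

```ruby
require "minitest/autorun"

# Step 1 (red): describe the desired behavior first.
# Run before PriceFormatter exists, these tests error with a NameError,
# which is the expected starting point.
class PriceFormatterTest < Minitest::Test
  def test_formats_cents_as_dollars
    assert_equal "$12.50", PriceFormatter.dollars(1250)
  end

  def test_pads_single_digit_cents
    assert_equal "$0.05", PriceFormatter.dollars(5)
  end
end

# Step 2 (green): write just enough implementation to make the tests pass.
# PriceFormatter is a hypothetical name used only for this sketch.
module PriceFormatter
  def self.dollars(cents)
    whole, remainder = cents.divmod(100)
    format("$%d.%02d", whole, remainder)
  end
end
```

The same shape carries over to RSpec: the test class becomes a `describe` block and each test method becomes an `it` example.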

TDD is a hard practice to follow strictly because it is so tempting to write the implementation first. Setting up tests can be tedious and time-consuming, and it usually feels easier to just write the tests later. But writing specs after the implementation removes many of the benefits of writing tests in the first place.

Our First TDD Application

Ideally, a test suite is developed and maintained throughout the life of an application. But that's not always the case. Testing an existing application can be intimidating if there is no framework in place. Imagining the thousands of lines of untested code can feel like a steep barrier to entry, and that was often what prevented me from wanting to write the initial tests for an application that had been around for several years.

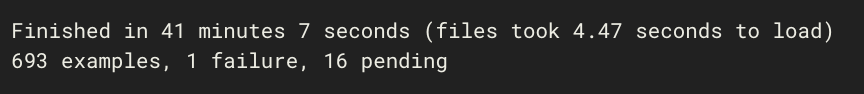

Conveniently, we began a new project in the fall of 2018, right around the time that we were exploring Test Driven Development. Naturally, we started the project with a goal of 100% test coverage. For every line of code we wrote, there was a corresponding test.

In theory, this was a great idea. In reality, not so much. Writing tests for every line of code more than doubled our development time and often caused frustration because:

- We were fairly new to writing specs, and the code for tests was often more difficult and more time-consuming to write than the code we were testing.

- Similar to the first point, we didn’t have a strong understanding of the tools we were using, like Factory Bot. As a result, many tests failed not because our code was wrong, but because the tests were incorrectly configured.

- Some features didn’t feel like they needed to be tested, but we had committed ourselves to 100% test coverage. The minuscule features and specs felt tedious and not worth the time they took to write.

As the application grew in complexity, so did our tests. And since the foundation of our test suite was built on our initial limited knowledge of testing, our tests were slow and fragile.

The Second Attempt

As a result of the frustration from our first TDD experience, we went into our next project with a different mindset about testing. We decided that 100% code coverage was unrealistic. Instead, we decided to only test the core features.

Our guiding principle is that if the code is custom, complex, or important, there should be a test for it.

Scoping down the test coverage turned out to be a great decision. Specs were written for important pieces of code and skipped for the obvious methods like:

    def human_description
      "An important object."
    end

In addition to reducing the number of tests written, our spec-writing and factory-building skills had improved significantly. Our tests became stronger and more detailed, which led to stronger code.

Benefits of Writing Tests

One of the unexpected benefits of adding tests to our development process has been identifying bugs in data provided to us by clients.

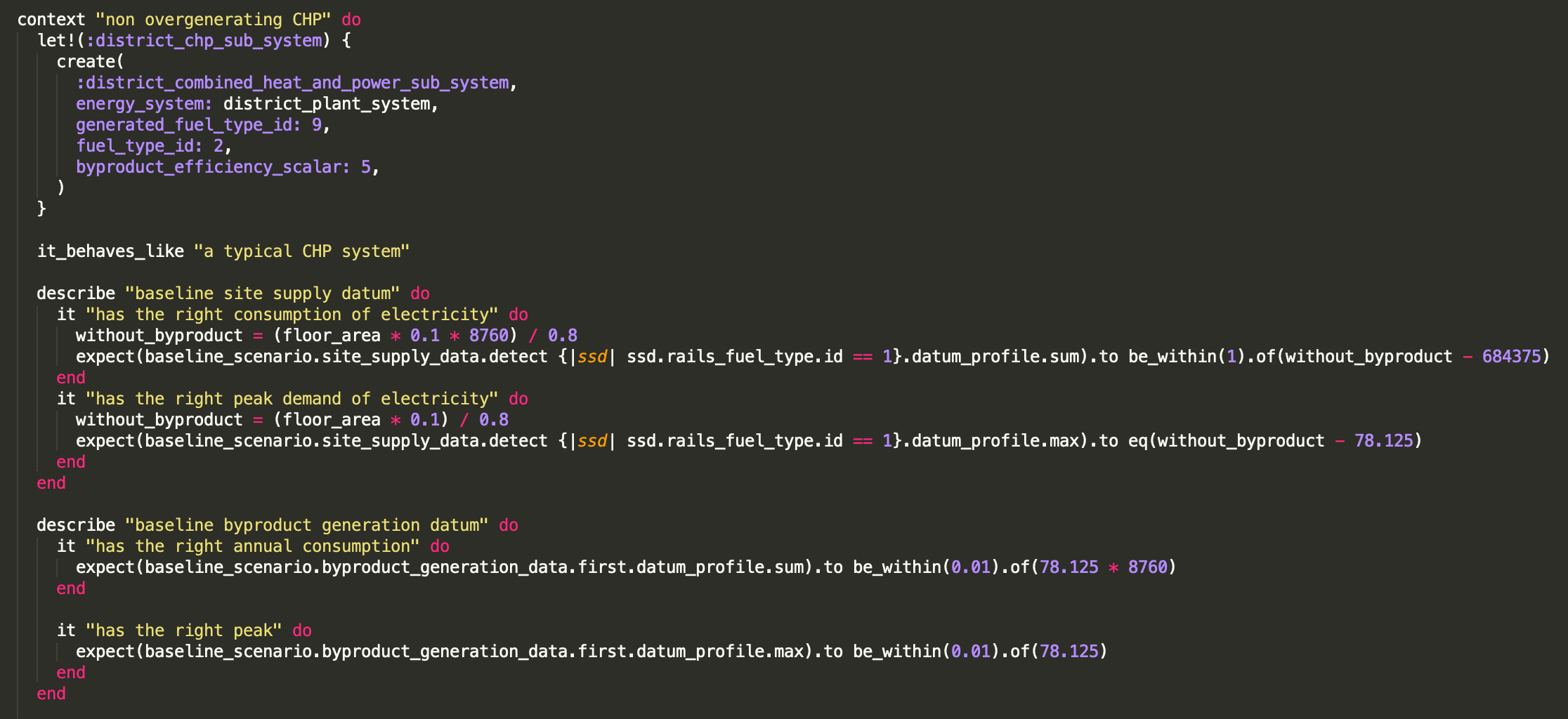

Some of our development work involves replacing an existing tool or complicated Excel workflow with a custom software solution. With one of our newer projects, we wrote tests to validate that our application's outputs matched the results from the existing tool. There have been multiple cases where our tests failed because the old tool or Excel formula was wrong and produced incorrect results.

Without tests, these bugs might never have been discovered!
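A comparison against a legacy tool can be sketched as follows. Everything here is hypothetical: the recorded values, the 7% surcharge rule, and the method names are invented for illustration and do not come from a client project. Amounts are integers in cents to keep the comparison exact.

```ruby
# Hypothetical outputs recorded from the legacy spreadsheet: input cents => result cents.
LEGACY_RESULTS = {
  10_000 => 700,
  25_000 => 1_750,
  40_000 => 3_000
}.freeze

# Hypothetical new implementation of the same calculation (a 7% surcharge).
def surcharge_cents(amount_cents)
  (amount_cents * 7) / 100
end

# Collect every input where the two tools disagree instead of stopping at the first.
def mismatches(recorded)
  recorded.each_with_object([]) do |(input, legacy_value), diffs|
    actual = surcharge_cents(input)
    diffs << { input: input, legacy: legacy_value, actual: actual } if actual != legacy_value
  end
end

mismatches(LEGACY_RESULTS).each do |diff|
  puts "input #{diff[:input]}: legacy=#{diff[:legacy]} new=#{diff[:actual]}"
end
```

A mismatch only says that the two tools disagree. Deciding which side is wrong still takes a manual check against the intended business rule.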

There are plenty of other things that we like about writing tests:

- Tests allow us to feel more comfortable refactoring code. If we have tests in place that pass, we can safely make major changes, knowing that the refactor is complete when the tests pass again.

- Writing tests makes us write cleaner, more maintainable code. Writing tests for functions that are more than a dozen lines is a nightmare. It’s not uncommon to have a difficult time writing tests, only to realize later down the line that the code needed to be refactored anyway.

- Implementing new features with TDD is a breeze now that we feel comfortable stubbing objects and writing tests. Given the desired behavior, we are able to write failing specs for a feature and then develop an implementation that will eventually give us green tests. This method will get us 90% of the way through a new feature. As a bonus, we know that it behaves as expected in most scenarios, and it is protected against breakage from future features and refactors.

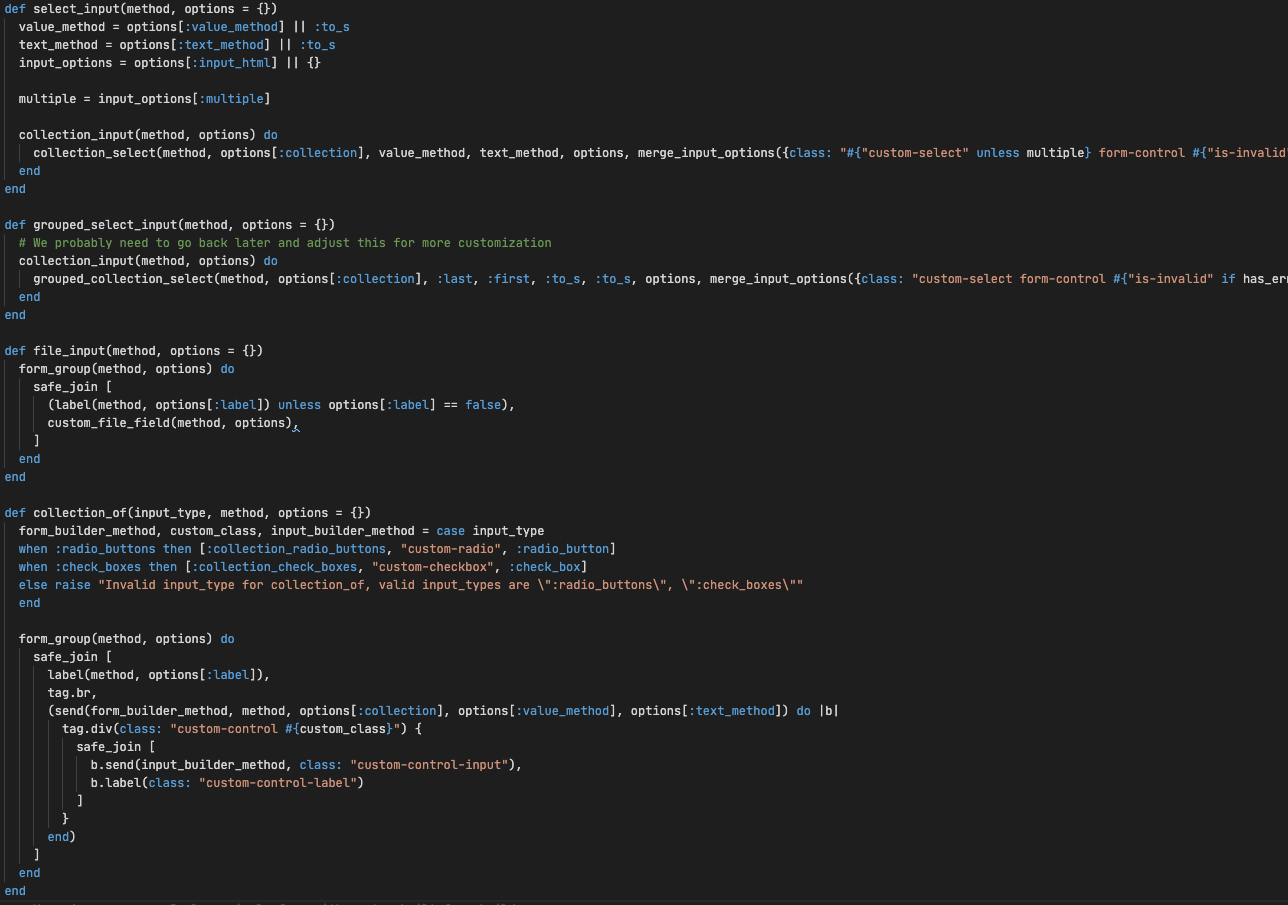

Our Testing Workflow

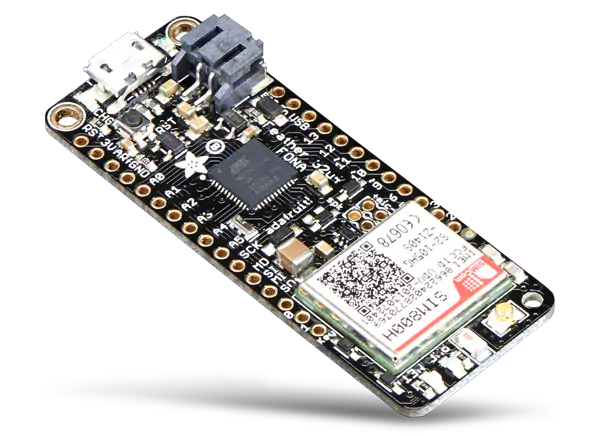

We use RSpec to write tests and Factory Bot to stub objects. Each commit triggers a CircleCI run to make sure that the new features we develop don't break functionality in other parts of the application. We keep our testing process consistent across projects so that developers can switch from one project to another smoothly.

Factories are an alternative to fixtures, which are the default way to stub objects in Rails. They allow us to build or create valid objects in a test environment, without requiring us to initialize the object and all of its attributes manually for each test. We use Factory Bot in place of fixtures for several reasons:

- Fixtures are just YAML files, so they can be tedious to write and are error-prone.

- Fixtures are usually all or nothing, meaning you have to create many fixtures to test many different behaviors (this brings us back to tedious). Factory Bot can override attributes on a per-test basis to create the desired variant of a base object.

- Factory Bot allows for more readability in our tests because you have to be more explicit about the object you're creating when writing the actual test.

In general, factories are versatile and allow for relationships, single table inheritance, polymorphism, and more to be defined on each instance of an object.
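The idea of a base object with per-test overrides can be shown in plain Ruby. The sketch below is a hand-rolled stand-in and is not Factory Bot's API: the `User` struct, `USER_DEFAULTS`, and `build_user` are hypothetical names chosen for illustration.

```ruby
# A plain-Ruby stand-in for a model. Not a real application class.
User = Struct.new(:name, :email, :role, keyword_init: true)

# Defaults that describe one valid "base" user.
USER_DEFAULTS = { name: "Test User", email: "user@example.com", role: "member" }.freeze

# Build a valid user from the defaults, replacing only the attributes
# that a particular test cares about.
def build_user(**overrides)
  User.new(**USER_DEFAULTS.merge(overrides))
end

member = build_user                  # every attribute comes from the defaults
admin  = build_user(role: "admin")   # same base object, one attribute changed

puts member.role
puts admin.role
```

With Factory Bot, the same kind of override is passed as extra arguments to `build` or `create`, so each test states only the attributes that matter to it.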

When we first started our TDD journey, we placed all factories in a single `factories.rb` file. This quickly became difficult to navigate. On future projects, we used a `factories` directory that essentially mirrored our `models` folder, with one file for each class. For example, we would have `factories/users.rb`. In that file, we could have multiple factories for all possible types of users, but there would not be any factories for other types of objects.

Moving Forward

Now that we have gone through the major hurdles of beginning TDD, it will be easier to add tests to existing projects. Over time, I think testing will become a regular part of our development process as we begin new projects. Our biggest challenge is finding the optimal level of code coverage that is maintainable and useful. We have made significant improvements since developing our first test suite, and I might even say that tests are now fun to write.