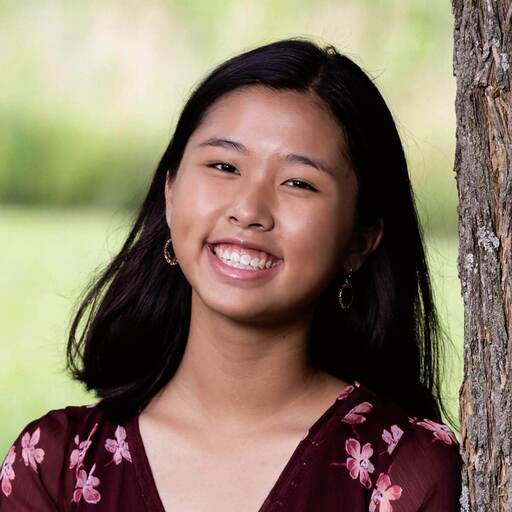

Enter Containers

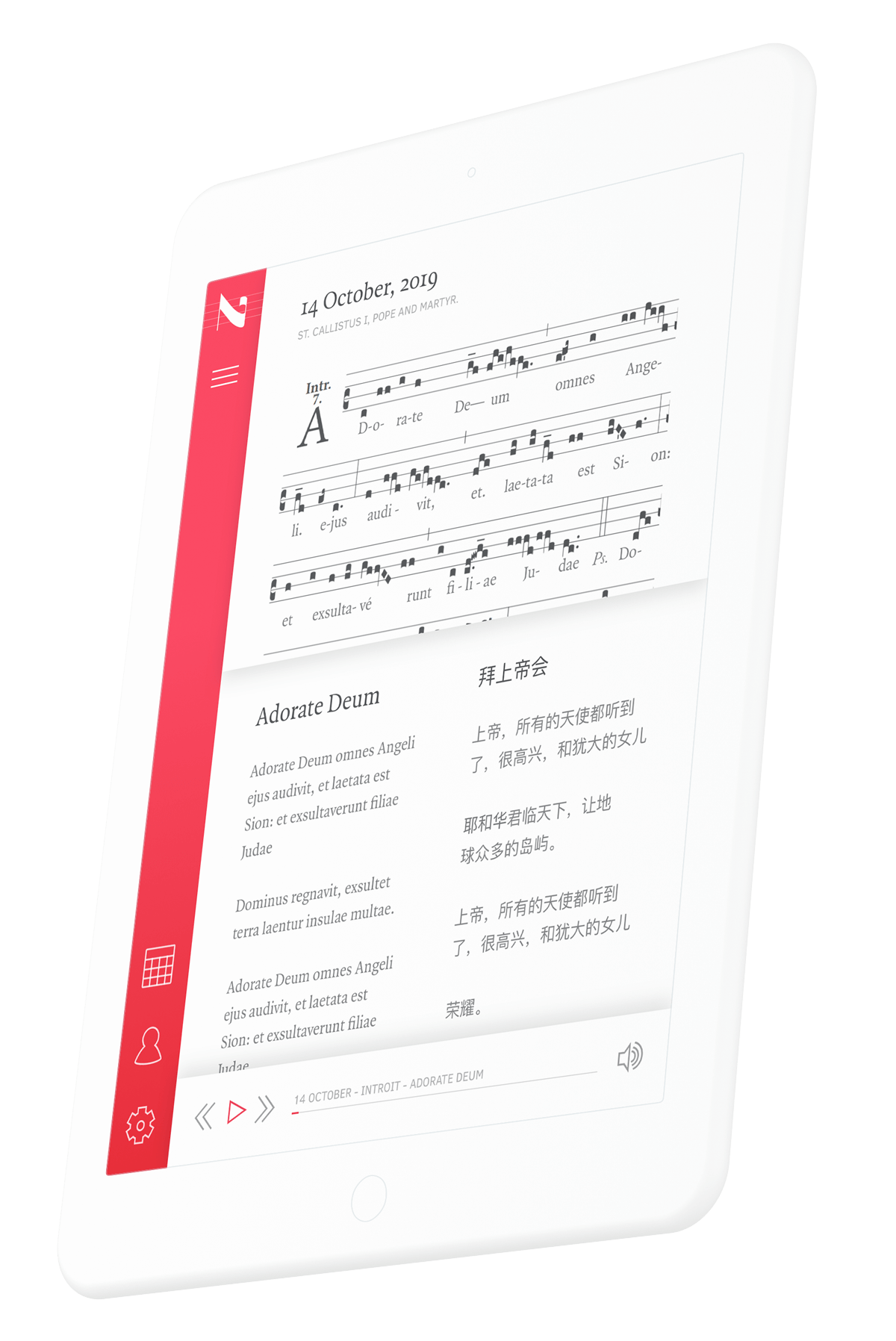

Packaged application software.

May 8, 2019

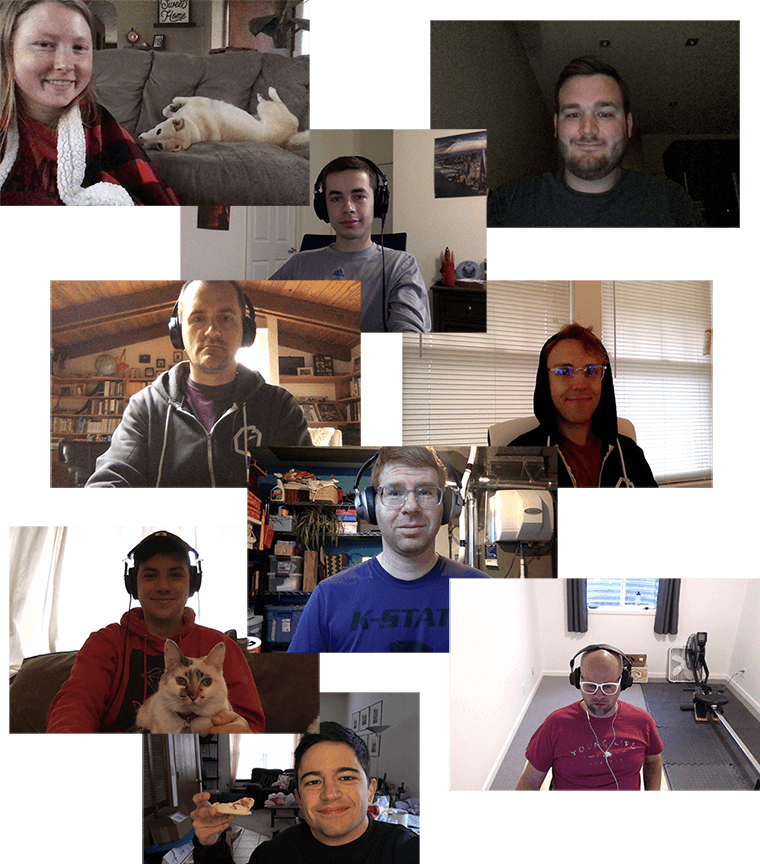

This is Part 2 in our series about our Ruby on Rails DevOps stack. In the last blog post we covered early methods of deploying our Rails app. As our team has grown from 2 to 8 people and the number of Rails apps we are developing and deploying has risen from just a few to dozens, we were consistently running into issues where our different Rails environments were relying on different versions of system libraries.

Or an update in one project would cause errors in an unrelated project.

Or changing a system package would require us to recompile Ruby.

Oof.

Setting up a new developer on each of our projects usually required an existing developer on the project to sit down and walk through all of the various tools and services that needed to be installed to run that project, and then help fix any conflicts that came up while installing those tools.

We wanted a conflict-free, repeatable system for development and deployment, where any developer could set up their development environment and deploy the application quickly.

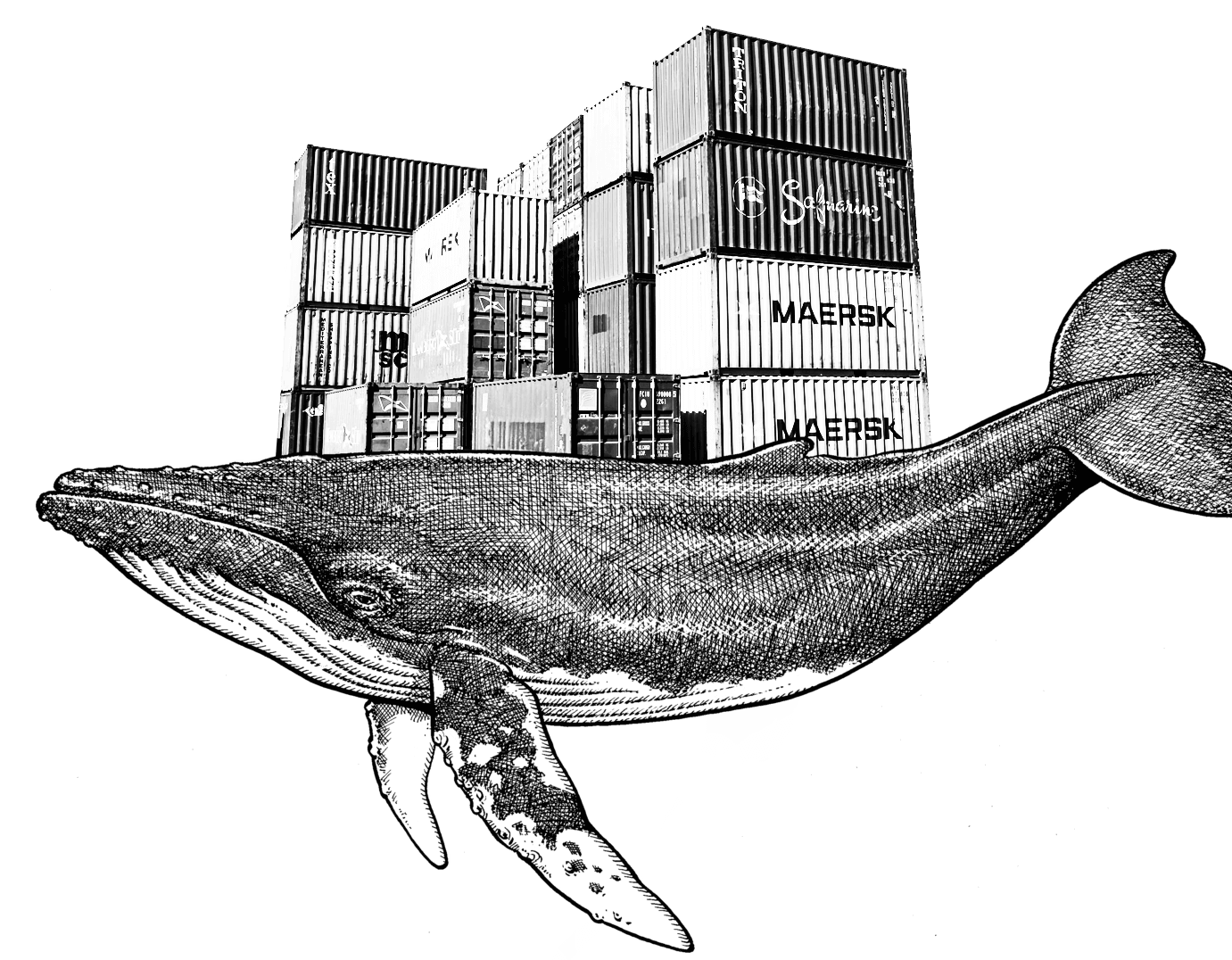

Containers

When we're talking about a Rails project, we're not just talking about the application server that runs the code. We're also talking about database storage, a caching service like Redis or Memcached, perhaps a search service like Elasticsearch. On top of git clone-ing the repository, a developer needs each of these services set up on their machine to run a project. Similarly, the production servers need all of these services as well.

Containers allow us to create discrete pieces of software that are packaged in a composable format. Containers are like a lightweight virtual machine running one piece of software on your computer. They have their own operating system, their own network, and their own filesystem: everything they need for your software to treat them as their own machine, separate from your computer. What's more, you can run different versions of services depending on what is needed (port conflicts notwithstanding). For one project maybe Redis 4.0 is needed, but on a different project Redis 5.0 is what we want. Containers allow us to do that.

The software we use to run and orchestrate our containers on our development machines is Docker. The Docker engine is the software that runs the containers and abstracts away all the parts of filesystem management and network handling. Docker Compose is a piece of software that allows you to define a set of containers you want to run and easily bring them up and down.
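As a rough sketch of running two versions of the same service side by side, a Compose file along these lines would start Redis 4.0 and Redis 5.0 together. The service names `redis4` and `redis5` and the host port 6380 are assumptions made up for illustration, not taken from our projects; `redis:4.0` and `redis:5.0` are the official image tags on Docker Hub.

```yaml
version: "3"

services:
  # Hypothetical service name; pins the Redis 4.0 image.
  redis4:
    image: redis:4.0
    ports:
      - "6379:6379" # host port 6379 -> container port 6379

  # Hypothetical service name; pins the Redis 5.0 image.
  redis5:
    image: redis:5.0
    ports:
      - "6380:6379" # a different host port avoids the port conflict
```

Both containers listen on 6379 internally. Only the host side of the port mapping has to differ, which is the port-conflict caveat mentioned above.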

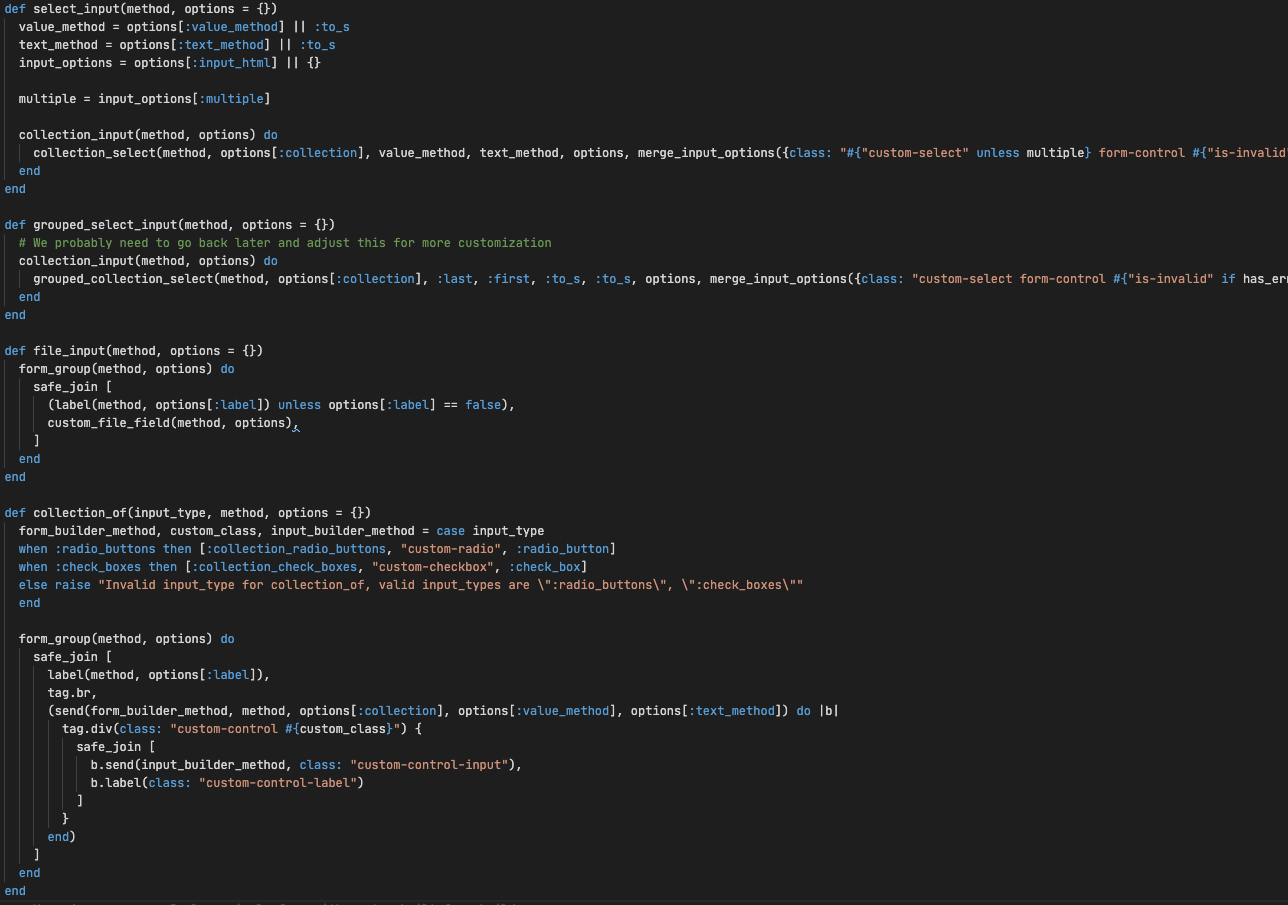

    version: "3"
    services:
      app:
        image: brandnewbox/bnb-ruby:2.5-postgresql
        command: bundle exec nanoc live -o 0.0.0.0 -p 3000
        env_file: .docker-development-vars
        volumes:
          - .:/app
          - bundle_cache:/usr/local/bundle
        ports:
          - "3000:3000"
        networks:
          - standard
    volumes:
      bundle_cache:
    networks:
      standard:

Docker and Docker Compose have completely changed the way we set up new developers on our projects. Instead of us documenting each service that an application needs in the README.md, our docker-compose.yml file acts as a manifest for the services a project needs.

Previously, a new developer needed someone who already had the project running to help them get set up. Now every application we run (including this website!) can be checked out of the repository and started with the same commands:

    docker-compose run app bundle install
    docker-compose up

...

...

The one service we found to be more trouble than it was worth inside Docker was the database. Because of the number of projects we work on, we often need to switch between projects, or at least reference several at the same time. With each project's database server running inside its own container, we could not access multiple application databases at once, and reaching any one project's database meant having that project's Docker Compose file running. Neither fit our workflow. So instead, each of our developers runs a PostgreSQL server and a MySQL server on their own machine, and we connect to them from inside Docker using the special "host.docker.internal" hostname, which lets containers reach services on the host machine. This is similar to using a hosted database solution in production, where the database is managed outside of containers. We just took the same idea and applied it to our development!
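A minimal sketch of how a Compose service might be pointed at a database on the host follows. The `DATABASE_HOST` variable name is hypothetical (the application's database configuration would have to read it), and the `extra_hosts` entry is an assumption that only matters on Linux, where Docker Engine 20.10 or later can map the hostname through `host-gateway`. Docker Desktop on macOS and Windows resolves "host.docker.internal" without it.

```yaml
version: "3"

services:
  app:
    image: brandnewbox/bnb-ruby:2.5-postgresql
    environment:
      # Hypothetical variable name; the app must read it when it
      # builds its database connection settings.
      DATABASE_HOST: host.docker.internal
    extra_hosts:
      # Assumption for Linux hosts (Docker Engine 20.10+): maps the
      # hostname to the host's gateway address.
      - "host.docker.internal:host-gateway"
```

The database server on the host also has to accept connections from the container's network, which may require adjusting its listen address and access rules depending on the platform.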

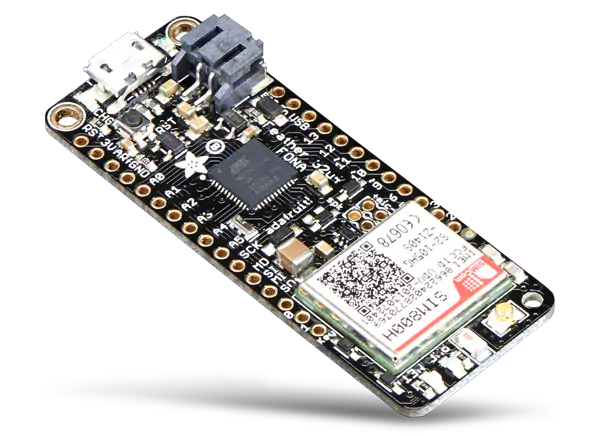

BNB Ruby

After establishing containers as a best practice, we wanted to standardize the base that an application was built on. We surveyed our applications and found that most of them had a database (either PostgreSQL or MySQL), most did image processing and so required the ImageMagick tools, and most did asset compilation with Node.js. We realized we could standardize those things and make a base container image that works across all of our applications.

The solution we came up with was bnb-ruby. We have a version of it for each version of Ruby we use, along with variants that have PostgreSQL or MySQL clients installed. Now all of our applications rely on this base image, with a few customized versions of it for projects that needed extra software packages!

Come back for part 3 of our series where we'll discuss how we get our application code into our container images for production!