Large Arrays in Rails

Benchmarking PostgreSQL's Storage of Arrays

September 16, 2019

One of our current projects came with a challenging requirement: it needed to store and process thousands of (non-sequential) years worth of information stored at the hourly level.

This kind of hourly data is often referred to as 8760 data, referring to the number of hours in a year.

Hopefully at some point in the future we will talk about some of the ways we tackled the processing of so much data, but for now I want to share a few of the steps we used to solve the storage problem.

We primarily use PostgreSQL as our database and want to explore the different ways we could use it to store large arrays. Our primary concern is efficiency due to the sheer byte-size of the data being stored. The 8760 data tips the scale around 12 kB per row. That is 10-50x the size of any other row in our application database!

Requirements

-

We need to be able to store arrays containing the following data types:

- boolean

- float

- integer

- string

- We do not care about storing arrays containing any date or time data

- We do not care about storing arrays containing text

- We need to store arrays that contain 8760 items

- The arrays will contain all of the same data type (no mix-n-match)

- We want the data to come back as the same data type it was stored as

Goals

The application is read-intensive, so we are going to focus on finding the fastest database read times so the application feels snappy during the most common use cases.

But, because we still care about the capturing data in a timely manner, we also want to make sure that the write times are acceptable.

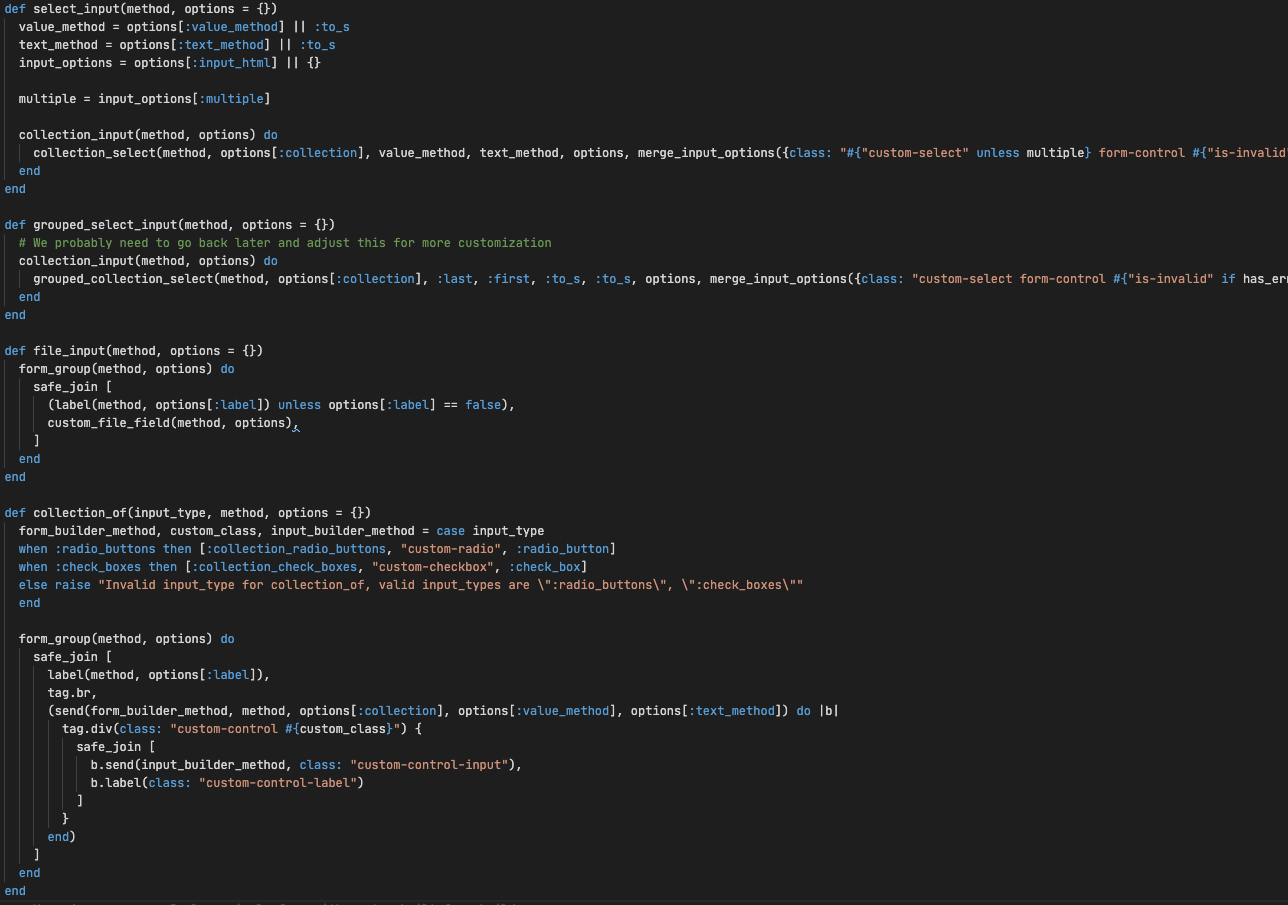

Using PostgreSQL's Arrays

We started by using PostgreSQL arrays for specific data types, which Rails supports out-of-the-box. First we wrote a migration that allowed us to try writing to the different data types:

class CreateHourlyValues < ActiveRecord::Migration[6.0]

def change

create_table :hourly_values do |t|

t.boolean :boolean, array: true, default: []

t.float :float, array: true, default: []

t.integer :integer, array: true, default: []

t.string :string, array: true, default: []

t.timestamps

end

end

endDatabase Array Write Benchmarks

Then we wrote a benchmark for writing data to the database. I've tried to normalize the amount of data being written by using the same length of information (754619980) across all data types.

require "benchmark"

results = []

data_types = [

{name: "Boolean", value: true, column: :boolean},

{name: "String", value: "754619980", column: :string},

{name: "Float", value: 0.75461998, column: :float},

{name: "Integer", value: 754619980, column: :integer},

]

data_types.each do |data_type|

results << data_type[:name]

params = {}

params[data_type[:column]] = Array.new(8760, data_type[:value])

results << Benchmark.measure {

25.times do

HourlyValue.create(params)

end

}

end

results.each do |r|

puts r

endRun it!

bundle exec rails runner spec/support/postgres_array_performance.rbGives us about the same performance across all data types.

Boolean

1.320000 0.160000 1.480000 ( 1.883358)

String

1.380000 0.100000 1.480000 ( 2.041565)

Float

1.630000 0.240000 1.870000 ( 2.403963)

Integer

1.770000 0.150000 1.920000 ( 2.327076)Database Array Read Benchmarks

Re-writing the benchmark above:

data_types.each do |data_type|

results << data_type[:name]

params = {}

params[data_type[:column]] = Array.new(8760, data_type[:value])

hv = HourlyValue.create(params)

results << Benchmark.measure {

100.times do

r = HourlyValue.find(hv.id)

end

}

endGives us some interesting results. Namely: that the float data type is noticeably slower than it's peers.

Boolean

1.340000 0.120000 1.460000 ( 1.642357)

String

1.640000 0.130000 1.770000 ( 2.082916)

Float

2.220000 0.070000 2.290000 ( 3.094243)

Integer

1.380000 0.190000 1.570000 ( 1.839548)

What about JSON?

On a whim, let's try things with PostgreSQL's JSON objects. So here's our migration:

class AddJsonToHourlyValues < ActiveRecord::Migration[6.0]

def change

add_column :hourly_values, :json, :json

add_column :hourly_values, :jsonb, :jsonb

end

endDatabase JSON Write Benchmarks

json_types = [

{name: "JSONB", column: :jsonb},

{name: "JSON", column: :json},

]

json_types.each do |json_type|

data_types.each do |data_type|

results << "#{json_type[:name]}/#{data_type[:name]}"

params = {}

params[json_type[:column]] = Array.new(8760, data_type[:value])

results << Benchmark.measure {

25.times do

HourlyValue.create(params)

end

}

end

end

The two are relatively comparable:

json/Boolean

1.140000 0.190000 1.330000 ( 1.653640)

json/String

2.540000 0.190000 2.730000 ( 3.164868)

json/Float

1.800000 0.090000 1.890000 ( 2.298632)

json/Integer

0.920000 0.230000 1.150000 ( 1.632565)

jsonb/Boolean

0.880000 0.110000 0.990000 ( 1.231931)

jsonb/String

2.770000 0.220000 2.990000 ( 3.602848)

jsonb/Float

1.710000 0.110000 1.820000 ( 2.387314)

jsonb/Integer

0.930000 0.150000 1.080000 ( 1.567992)Database JSON Read Benchmarks

json_types.each do |json_type|

data_types.each do |data_type|

results << "#{json_type[:name]}/#{data_type[:name]}"

params = {}

params[json_type[:column]] = Array.new(8760, data_type[:value])

hv = HourlyValue.create(params)

results << Benchmark.measure {

100.times do

r = HourlyValue.find(hv.id)

end

}

end

end

The results hint at JSON being slightly faster than JSONB. This might be due to the fact that JSONB supports more features and thus requires more lifting to serialize / deserialize data.

JSONB/Boolean

1.110000 0.250000 1.360000 ( 1.832779)

JSONB/String

2.890000 0.140000 3.030000 ( 3.596975)

JSONB/Float

1.720000 0.110000 1.830000 ( 2.379918)

JSONB/Integer

0.990000 0.110000 1.100000 ( 1.822468)

JSON/Boolean

0.950000 0.050000 1.000000 ( 1.197806)

JSON/String

2.920000 0.170000 3.090000 ( 3.575563)

JSON/Float

1.770000 0.230000 2.000000 ( 2.495976)

JSON/Integer

0.930000 0.100000 1.030000 ( 1.504159)Conclusion

Storing the data as JSON gives us slightly faster read times than storing the data as a data-type-specific array. It also gives us the huge bonus of not having to worry about what kind of data we are storing. The data comes out just like it went in!

| Data Type | Array | JSON |

|---|---|---|

| Boolean | 1.642357 | 1.197806 |

| String | 2.082916 | 3.575563 |

| Float | 3.094243 | 2.495976 |

| Integer | 1.839548 | 1.504159 |

We implemented this data store almost a year ago, and since then the application has been used to collect and store over seventeen thousand records. That is an hour-by-hour recap of history since the settlement of the Americas!